Downloading Flink 1.20.3

# Official site:

wget https://dlcdn.apache.org/flink/flink-1.20.3/flink-1.20.3-bin-scala_2.12.tgz

# Tsinghua mirror:

wget https://mirrors.tuna.tsinghua.edu.cn/apache/flink/flink-1.20.3/flink-1.20.3-bin-scala_2.12.tgz
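The later steps refer to `$FLINK_HOME`, so the downloaded tarball has to be unpacked and the variable set first. A minimal sketch, assuming the tarball unpacks into a `flink-1.20.3` directory; `unpack_flink` is a hypothetical helper name and the target directory is your choice:

```shell
# unpack_flink is a hypothetical helper, not part of the Flink distribution.
# It extracts the tarball into a target directory and prints the Flink home path.
unpack_flink() {
  local tarball="$1" dest="$2"
  mkdir -p "$dest" || return 1
  tar -xzf "$tarball" -C "$dest" || return 1
  # Assumption: the binary tarball unpacks into a directory named flink-1.20.3
  echo "$dest/flink-1.20.3"
}
```

For example, `export FLINK_HOME="$(unpack_flink flink-1.20.3-bin-scala_2.12.tgz /opt)"`, where `/opt` is only an illustrative install location.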

Flink's Hadoop dependency

# Add the Hadoop jar that Flink uses; my Hadoop version is 2.10

wget https://repo1.maven.org/maven2/org/apache/flink/flink-shaded-hadoop-2-uber/2.8.3-10.0/flink-shaded-hadoop-2-uber-2.8.3-10.0.jar

# Copy flink-shaded-hadoop-2-uber-2.8.3-10.0.jar into the $FLINK_HOME/lib directory
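The copy step could be wrapped in a small function that fails early when `FLINK_HOME` is unset or the jar is missing. This is only a sketch; `install_flink_jar` is a hypothetical helper name, not something Flink provides:

```shell
# install_flink_jar is a hypothetical helper: copy one jar into $FLINK_HOME/lib.
install_flink_jar() {
  local jar="$1"
  if [ -z "${FLINK_HOME:-}" ] || [ ! -d "$FLINK_HOME/lib" ]; then
    echo "FLINK_HOME is not set or has no lib directory" >&2
    return 1
  fi
  if [ ! -f "$jar" ]; then
    echo "jar not found: $jar" >&2
    return 1
  fi
  cp "$jar" "$FLINK_HOME/lib/"
}
```

Usage: `install_flink_jar flink-shaded-hadoop-2-uber-2.8.3-10.0.jar`, run from the directory where the jar was downloaded.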

验证是否生效:

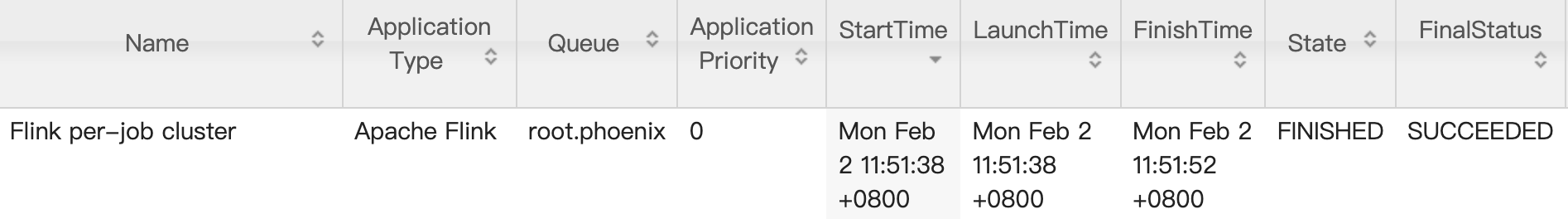

$FLINK_HOME/bin/flink run -m yarn-cluster -d $FLINK_HOME/examples/streaming/WordCount.jar可以在yarn web中查看执行情况:

Flink's Hive dependency

# To use Hive from Flink, the Hive-related jars must be placed in the $FLINK_HOME/lib directory; my Hive version is 2.3.9

Jar list: flink-sql-connector-hive-2.3.10_2.12-1.20.3.jar, hive-exec-2.3.9.jar, antlr-runtime-3.5.2.jar

# Download URLs:

wget https://repo.maven.apache.org/maven2/org/apache/flink/flink-sql-connector-hive-2.3.10_2.12/1.20.3/flink-sql-connector-hive-2.3.10_2.12-1.20.3.jar

wget https://repo1.maven.org/maven2/org/apache/hive/hive-exec/2.3.9/hive-exec-2.3.9.jar

wget https://repo1.maven.org/maven2/org/antlr/antlr-runtime/3.5.2/antlr-runtime-3.5.2.jar
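Once the jars are downloaded and copied, a quick check that all of them actually landed in `$FLINK_HOME/lib` can save a confusing startup error. A rough sketch; `check_flink_jars` is a hypothetical helper name:

```shell
# check_flink_jars is a hypothetical helper: report which of the given jar
# names exist in $FLINK_HOME/lib, and return non-zero if any are missing.
check_flink_jars() {
  local lib="$FLINK_HOME/lib" missing=0 jar
  for jar in "$@"; do
    if [ -f "$lib/$jar" ]; then
      echo "found: $jar"
    else
      echo "missing: $jar"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `check_flink_jars flink-sql-connector-hive-2.3.10_2.12-1.20.3.jar hive-exec-2.3.9.jar antlr-runtime-3.5.2.jar`.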

Flink's Kafka dependency

# Flink Kafka connector:

wget https://repo1.maven.org/maven2/org/apache/flink/flink-connector-kafka/3.4.0-1.20/flink-connector-kafka-3.4.0-1.20.jar

# Kafka client:

wget https://repo1.maven.org/maven2/org/apache/kafka/kafka-clients/3.9.1/kafka-clients-3.9.1.jar

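Note: if the Flink SQL client is using an application created by a YARN session, remember to recreate that YARN application; only then will the newly added jars be loaded.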
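Recreating the session could look roughly like the sketch below: kill the old YARN application, then start a new detached session. `run` and `restart_flink_yarn_session` are hypothetical helper names, and the application ID is assumed to come from `yarn application -list`. Setting `DRY_RUN=1` prints the commands instead of executing them:

```shell
# run is a hypothetical wrapper: print the command when DRY_RUN=1, else execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# restart_flink_yarn_session is a hypothetical helper.
# $1 is the ID of the old session application (see `yarn application -list`).
restart_flink_yarn_session() {
  local app_id="$1"
  # Stop the old session so its stale classpath goes away
  run yarn application -kill "$app_id" || return 1
  # Start a new detached session, which picks up the jars now in $FLINK_HOME/lib
  run "$FLINK_HOME/bin/yarn-session.sh" -d
}
```

Running it once with `DRY_RUN=1` first is a cheap way to confirm the application ID and paths before anything is killed.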
